OpenAI’s Superalignment team, charged with controlling the existential danger of a superhuman AI system, has been disbanded, Wired reported on Friday.

OpenAI launched its Superalignment team almost a year ago with the ultimate goal of controlling hypothetical super-intelligent AI systems and preventing them from turning against humans. Naturally, many people were concerned—why did a team like this need to exist in the first place?

Ilya Sutskever, OpenAI’s co-founder and chief scientist, announced he was leaving the company on Tuesday. OpenAI confirmed the departure in a press release.

As 2024 begins, there has been plenty of speculation about what lies ahead for artificial intelligence. AI was the hottest industry in the world last year and it will likely continue to be so throughout 2024—and maybe the rest of your godforsaken life. That said, many of the concerns about this startling new…

A survey of the leading AI scientists says there’s a 5% chance that AI will become uncontrollable and wipe

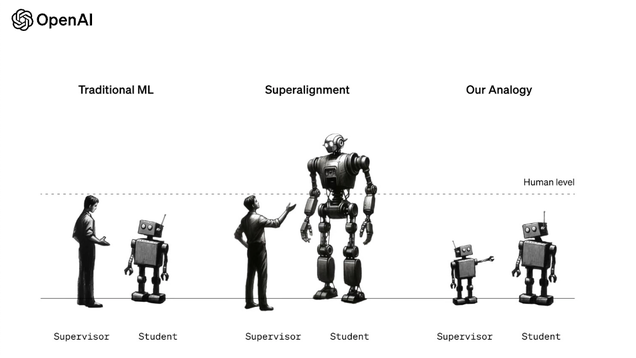

A new research paper out of OpenAI Thursday says a superhuman AI is coming, and the company is developing tools to ensure it doesn’t turn against humans.

Leaders and policymakers from around the globe will gather in London next week for the world’s first artificial intelligence safety summit.

A team of researchers from around the world, led by Ohio State University, will be gathering data to create artificial intelligence-informed models to better understand the long-term impact of the climate crisis on biodiversity.

Since ChatGPT’s release in late 2022, many news outlets have reported on the ethical threats posed by artificial intelligence.